Elon Musk recently joked that the James Webb telescope experienced delays because the computers were busy trying to render what the planets looked like, since the programmers who created the simulation we’re currently living in failed to anticipate that we humans would be able to see these stars. It’s a fun joke that many are starting to take seriously. AI is now generating physics realistic enough to fool the average person. So if AI is doing it, why couldn’t the simulator we live in do it?

While I don’t take the simulation hypothesis very seriously, I have a fun piece of empirical evidence that also indicates it’s true.

Much of the art that AI is generating these days is impressive, but the most impressive stuff is with physics and animal simulations. Simulating human images is going to be more difficult because we Merge (share in linguistic signals) with people differently than we do with animals and the environment. Disney made the whites of animated animals’ eyes bigger and bigger over time because kids Merge with animals better that way: we grammarize the model’s intent when its sclerae are white.

Actual animal eyes are nothing like this, so we’re less capable of Merging with them. Compare to an actual deer:

It’s interesting to compare early cartoon characters, which had more realistic, beadier animal eyes, like Oswald the Lucky Rabbit:

Aside from eye sclera being different, we don’t Merge with animals as we do with humans because our neural blueprints are so different. Animals have no ROBA, but we do. Animals cannot grammarize back on us, though they might try. And yet animations can convince us otherwise. Such anthropomorphizing is normal and expected in any society, but when kids Merge with what are essentially non-merge-able species, then odd things start happening. (I’m specifically talking about a recent phenomenon which begins with an f and has two rs in it.)

At any rate, this isn’t about Disney or whatever it’s doing to society. The broader issue is that rendering humans is more difficult because humans are so Merge-able. Every gesture means something, and every way we respond means something back. We love this stuff and it’s the life of human culture. It’s therefore difficult, if not impossible, to render these things with total realism. Animators have known for a long time that it’s easy to make likable characters by exaggerating stereotypical gestures, like the rolling of the eyes or a big sigh. These can be animated such that the viewer knows the exact intent of the model.

(Note also how Disney has been gradually increasing the length of anticipation time before actions. In my 5 Stages of Movement video I detailed how these can be fiddled with to make a move snappier or more aesthetic. In Snow White (1937), animations are snappier. In Robin Hood (1973), antics before the big action are elongated. Compare to Cars 3 (2017), where every action has a smooth anticipation, made easier thanks to computers where you can just move the Bézier curve at will. Animation producers probably think this has some kind of subtle, soothing effect on kids. I can only assume this is the reason behind such antic-inflation. It’s not universal, and it is sometimes cut short for effect, but in general the trend has been toward a more aesthetic, smoother anticipation in animation. I wonder if kids raised on these kinds of animations have more exaggerated anticipations as a result, which might be an effort to clue their friends in as to what their real emotions are.)

The audience can Merge relatively easily with an animated character provided the animator and the viewer agree on what these signals mean. A sigh must mean some kind of release, an eye roll must mean some kind of frustration. The rest of the body can be drawn into this affective response. It need not be realistic at all. The exaggerated movement is all that’s needed.

Once the animator tries to animate realistic people, they enter the uncanny valley. The valley was wide in early animation. Nobody dared enter it. But these days, thanks to the likes of Avatar and Metahumans, it’s more like the Uncanny Gorge, short in distance but infinitely deep. The more realistic the render, the better the animator must be. It’s not enough to make a Metahuman and assume people will Merge with it: better animation is now required. If you can’t afford a team of the best animators in the world, don’t use Metahumans. You can still get away with exaggerated stick figures like South Park does.

Once you try to apply AI to human animation, things get even more difficult. AI can’t Merge with humans. It’s not in that business anyway, as it’s a step removed from the animation team. The animator will likely have to fight against whatever the AI renders. A sigh might look more like a heavy breath. Eye rolls might appear ghastly or too segmented from the rest of the body. You can theoretically make an inverse-kinematics system that “trains” the AI to make the rest of the body and face conform to any gesture, but it will never actually be Merging with the audience. The audience will always be watching something that’s slightly off. They just might not know why.

We’re only talking about human emotion. What about human action? Perhaps AI will figure it out, but so far it’s hardly human. It’s physically IK-correct, but there’s no character. You could write a “character” into the bumbling Nvidia armored rig and AI would give stereotyped affects as a result. But it’s not Merge. Always a step removed.

What about human interaction? Are we to expect that Metahumans will be able to realistically communicate with a viewer through AI? Are we expected to believe that they can be therapists in the future? No doubt they are trying. I don’t anticipate this will be successful any time soon. AI is not actual intelligence, not actual counseling, because it’s run through guardrails and data curation. It can’t Merge. The programmers Merge with the client, but through a medium that is hardly designed anymore.

None of this is to say that AI-generated humans will never appear realistic. This isn’t the point. The point is simply that human behavior is incredibly difficult, I believe impossible, to model and replicate. Behaviorists tried and failed. Innate-aggressionists tried and failed.

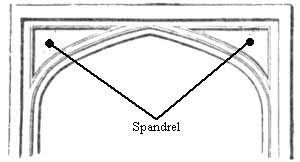

Why have they failed? Because scientists, modelers, theorists etc. have failed to account for the simple fact that human behavior is mechanically different in some domains from that of every other living being. In the case of combat, humans alone are capable of recursive, object-based aggression (ROBA). It’s most likely that ROBA springs from the spandrel (an architectural gap) which once lay dormant between the modules of object-usage and combat in the brain, but which, once suddenly connected, produced a new module: ROBA. I believe this new module-from-spandrel is the best candidate for being the module for grammatical language.

And yet, for some odd reason, nobody in thousands of years (maybe since Hesiod) has been able to identify this unique mechanical difference between humans and animals. Perhaps the designers of our simulation said, “Hey boss, we finally found a way to get language going, but get this: you have to connect their object usage with combat. Somehow that gives these guys language.” The boss replies, “Great, ship it, but program a guardrail against anyone noticing that their combat is somehow different from animal combat. In fact, send some kind of weird thought kernel into the system so they’ll be convinced that humans are exactly like everything else in the universe. This way they can’t exploit the spandrel to find us and get out.”

This spandrel got language going and it was running smoothly and nobody noticed. Nobody said *anything* about it. In fact, people said everything about everything else, except the fact that humans have ROBA, and animals do not.

If I put my tin foil hat on, I might predict that my theory will be particularly unpopular or undergo severe attack because it’s correct and the simulation computers simply aren’t ready for its full implications.

But I don’t believe the simulation hypothesis, because if I’m right, then I shouldn’t have been allowed to discover this novel fact, I shouldn’t be allowed to publish a book about it, and you shouldn’t be allowed to be convinced by it. So far I’ve not found these conditions to be true (book is forthcoming). Further, the application of Merge to novel things like coffee, donuts, and Vaporwave should probably trigger more glitches than it does. Instead it’s always more and more fascinating.

… Or… whoever designed us to have language *wants* us to Merge so we can go beyond the created environment and develop some kind of fellowship with the creator.